Google’s algorithm is constantly evolving, as its long history of updates clearly shows. The latest update, known as Bidirectional Encoder Representations from Transformers (BERT), is the most significant development in how Google Search interprets and understands queries since the launch of RankBrain.

Google BERT is the new natural language processing model that will enable the search engine to understand human language, both written and spoken. The Mountain View company aims to answer the most complex queries, those whose search intent can only be understood from the entire sentence and not just the keyword.

How does Google BERT work?

Traditionally, Google has based its search algorithm merely on the analysis of the keywords used. In other words, when someone made a query, Google would search its index until it found the content that most closely matched those keywords.

With BERT, this changes. The individual meaning of each word takes a back seat, and it is the meaning of the sentence as a whole that the algorithm prioritizes. Therefore, Google will continue to analyze the words, but it will do so collectively, taking into account the context in which they are used.
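The limits of pure keyword matching can be illustrated with a minimal sketch. This is a toy scorer and not Google's actual algorithm: the function name `keyword_overlap`, the query, and the two documents are all invented for this illustration. It only counts shared words, so it cannot tell apart two documents that use the same words with opposite meanings.

```python
from collections import Counter


def keyword_overlap(query: str, document: str) -> int:
    """Count the word occurrences shared by query and document, ignoring order."""
    query_words = Counter(query.lower().split())
    doc_words = Counter(document.lower().split())
    # Counter intersection keeps the minimum count of each shared word.
    return sum((query_words & doc_words).values())


# Hypothetical query and documents, made up for this example.
query = "traveler from brazil to usa"
doc_a = "visa rules for a traveler going from brazil to the usa"
doc_b = "visa rules for a traveler going from the usa to brazil"

score_a = keyword_overlap(query, doc_a)
score_b = keyword_overlap(query, doc_b)

# Both documents contain every query word, so the toy scorer rates them equally,
# even though only doc_a matches the direction of travel in the query.
print(score_a, score_b)
```

Because word order and the role of "from" and "to" are ignored, both documents receive the same score. Reading the sentence as a whole, as described above, is what makes it possible to separate them.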

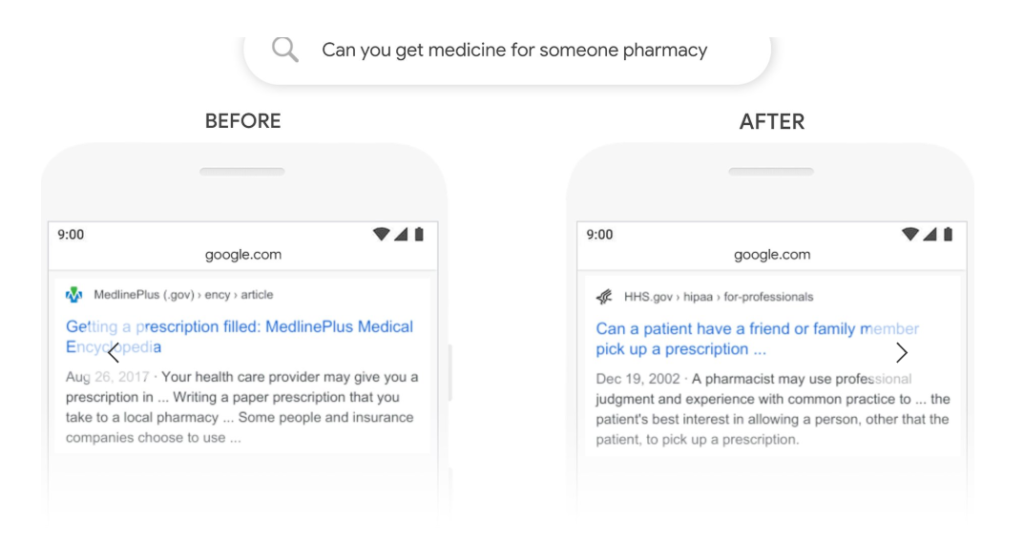

In the following example, which Google itself posts on its official blog, shows how BERT works:

But how is Google BERT able to understand the real meaning of a sentence? To answer this question, we take a closer look at two of the concepts that make up the BERT acronym:

1. (B)idirectional

BERT analyzes the text in both directions: it takes into account the words on the left and right of each keyword. This way, by relating all the words to each other, it can process a much deeper context. This is a matter of global semantic interpretation.

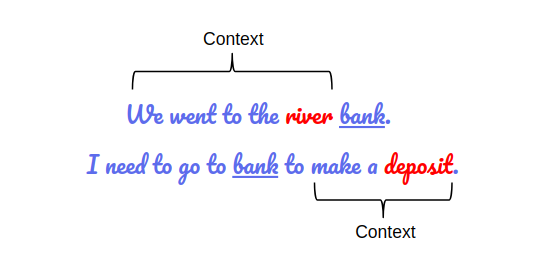

To explain in more depth, we take a representative example from Analytics Vidhya. As the example shows, the meaning of the word “bank” changes according to the context of the sentence. Although “bank” is the keyword, BERT will take into account the surrounding words to give a more accurate answer to the search intent.
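The idea of looking in both directions can be sketched in a few lines of code. This is only an illustration and not how BERT is implemented: BERT learns word relationships from large amounts of text, whereas the cue words in `SENSE_CUES`, the function names, and the example sentences below are hand-picked assumptions for this example.

```python
def context_window(tokens, index, size=3):
    """Return the words to the left and to the right of tokens[index]."""
    left = tokens[max(0, index - size):index]
    right = tokens[index + 1:index + 1 + size]
    return left, right


# Hypothetical cue words, chosen by hand for this illustration only.
SENSE_CUES = {
    "financial institution": {"money", "deposit", "account", "loan"},
    "river side": {"river", "water", "fishing", "shore"},
}


def guess_sense(sentence, target="bank", size=5):
    """Pick the sense whose cue words appear most often around the target word."""
    tokens = sentence.lower().split()
    index = tokens.index(target)
    left, right = context_window(tokens, index, size)
    # Use the words on BOTH sides of the target, not only the ones before it.
    context = set(left) | set(right)
    scores = {sense: len(cues & context) for sense, cues in SENSE_CUES.items()}
    return max(scores, key=scores.get)


print(guess_sense("she sat on the bank of the river"))
print(guess_sense("he went to the bank to deposit money"))
```

In both sentences the words that reveal the meaning come after “bank”, so a reader that only looked at the words on the left would have nothing to go on. That is the practical benefit of reading in both directions.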

2. (T)ransformers

Transformers are a type of neural network used in Natural Language Processing (NLP) models. Google has been using artificial intelligence in search since RankBrain, but the approach has now evolved. Unlike the NLP of 2015, which had a textual function, BERT’s NLP works as a system that weighs the relationships between the words in a sentence to help the algorithm understand certain words, such as connectors, pronouns or prepositions.
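At the core of a Transformer is a mechanism called attention, which gives every word a set of weights over the other words in the sentence. The sketch below shows the basic calculation (scaled dot-product followed by a softmax) under heavy simplifying assumptions: the two-dimensional word vectors are invented for this illustration, and the same vectors are used as queries and keys, whereas a real model learns separate, much larger representations.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query_vec, key_vecs):
    """Scaled dot-product attention weights of one word over all words."""
    scale = math.sqrt(len(query_vec))
    scores = [
        sum(q * k for q, k in zip(query_vec, key)) / scale
        for key in key_vecs
    ]
    return softmax(scores)


# Hypothetical word vectors, invented for this illustration.
words = ["traveler", "to", "usa"]
vectors = [[1.0, 0.2], [0.3, 1.0], [0.9, 0.8]]

# How strongly the preposition "to" relates to each word in the phrase.
weights = attention_weights(vectors[1], vectors)
for word, weight in zip(words, weights):
    print(f"{word}: {weight:.2f}")
```

With these made-up vectors, “to” is weighted more towards “usa” than towards “traveler”, which gives a rough intuition for how a small connector can be tied to the word it modifies.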

In human language, connectors or links are indispensable to give meaning to the message. Before, Google avoided them and focused on individualized keywords. With BERT, now the meaning of each word inserted into the search engine depends on the connectors that complement it.

Matías Pérez Candal, Strategy Director at Labelium Spain

The more ambiguous and long-tail searches will benefit tremendously, given that the connectors are able to give meaning to words that, individually, the algorithm could not understand correctly. Therefore, the results will be more relevant and accurate to what the user is looking for.

How to approach SEO optimization with Google BERT

In fact, Google BERT affects how the search engine understands users’ searches. Considering the rationale behind this update (addressing searches in a more human way), it is possible to apply the same principle to attract higher-quality traffic.

As Neil Patel comments on his blog: the important factor is that the user, not the search engine, perceives valuable content. Therefore, it is advisable to keep the following guidelines in mind:

- The content should be more communicative. The texts have to be oriented towards people and not towards the search engine, so it is advisable to write in a natural style.

- The website must be structured so that the search intent is prioritized. Keywords are still important, but they are no longer the ultimate goal of the text. There must now be a reason for including them, because the number of times they are repeated throughout the text is no longer the only thing that is rewarded.

- It is essential to give a real and useful answer to the queries. If Google BERT can understand the meaning of the search and the intention behind it, it is logical to think that the content will reach a narrower audience, but one that is a better match for it.

Will BERT change the way we understand SEO in 2020? Much remains to be explored, although the direction the industry is taking promises an inevitable transformation towards a more user-centric view of SEO strategies.